To select, filter, and sort a data frame in Spark using R

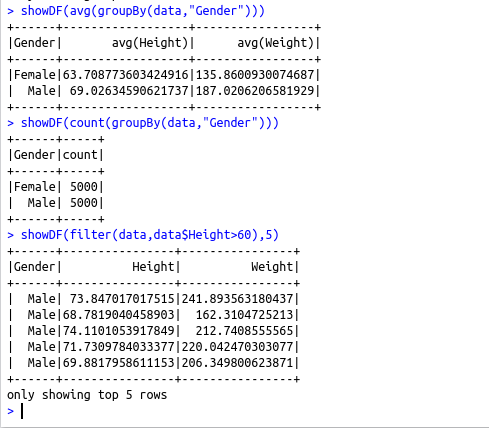

groupBy(data,"Colname") – Group the data frame by a column

filter(data,condition) – Keep only the rows of a data frame that satisfy a condition

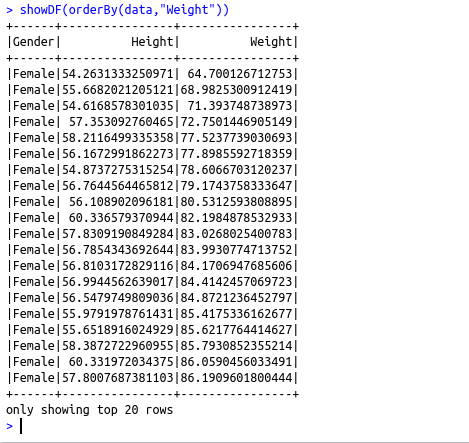

orderBy(data,"Colname") – Sort data frame by the specified columns

arrange(data,"Colname",decreasing=FALSE) – Sort data frame by the specified columns in ascending or descending order

select(data,"Colname") – Select a set of columns with names or Column expressions
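groupBy on its own only returns a grouped object, so it is normally followed by an aggregation. The snippet below is a minimal sketch of that pairing. It assumes SparkR is installed and a local Spark is available, and it starts the session with sparkR.session, the Spark 2.x entry point. The `people` data frame and its `Name`, `Team` and `Weight` columns are hypothetical illustration data, not the data set used in this post.

```r
library(SparkR)

# Assumption: a local Spark installation is reachable from this R session
sparkR.session(master = "local[*]")

# Hypothetical sample data, used only for illustration
people <- data.frame(
  Name = c("Ann", "Bob", "Cid", "Dee"),
  Team = c("A", "A", "B", "B"),
  Weight = c(55, 72, 64, 81),
  stringsAsFactors = FALSE
)
sdf <- createDataFrame(people)

# groupBy returns a GroupedData object; it has to be aggregated to get rows back
grouped <- groupBy(sdf, "Team")

# Number of rows per group (result columns: Team, count)
counts <- count(grouped)

# Average weight per group, with the result column named AvgWeight
averages <- agg(grouped, AvgWeight = avg(sdf$Weight))

# Sort before collecting, because the row order after grouping is not guaranteed
counts_local <- collect(arrange(counts, "Team"))
averages_local <- collect(arrange(averages, "Team"))
print(counts_local)
print(averages_local)

sparkR.session.stop()
```

collect brings the small aggregated result back into an ordinary R data frame, which is usually the convenient form for printing or plotting.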

library(sparklyr)

#Set up spark home

Sys.setenv(SPARK_HOME="/…/spark-2.4.0-bin-hadoop2.7")

.libPaths(c(file.path(Sys.getenv("SPARK_HOME"), "R", "lib"), .libPaths()))

#Load the library

library(SparkR)

#Initialize the Spark Context

#To run spark in a local node give master="local"

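sc <- sparkR.init(master="local")

#Start the SparkSQL Context

sqlContext <- sparkRSQL.init(sc)

#[the step that loads data and the start of the filter call are missing from this listing] … > 60),5)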

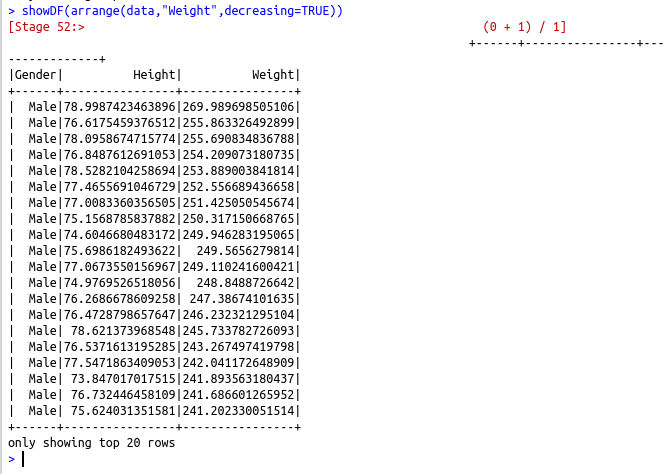

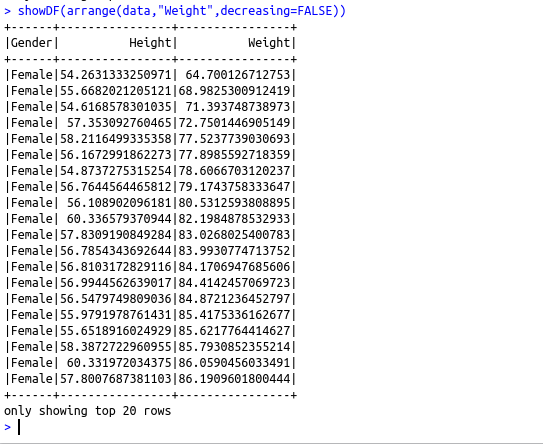
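For reference, here is a minimal sketch of the forms a filter condition can take in SparkR: a Column expression, a SQL-style string, or several Column expressions combined with `&`. It assumes SparkR is installed and a local Spark is available. The `people` data and the `Name`, `Team` and `Weight` columns are hypothetical, chosen only so the example runs on its own.

```r
library(SparkR)

# Assumption: a local Spark installation is reachable from this R session
sparkR.session(master = "local[*]")

# Hypothetical sample data, used only for illustration
people <- data.frame(
  Name = c("Ann", "Bob", "Cid", "Dee"),
  Team = c("A", "A", "B", "B"),
  Weight = c(55, 72, 64, 81),
  stringsAsFactors = FALSE
)
sdf <- createDataFrame(people)

# Condition written as a Column expression
heavy <- filter(sdf, sdf$Weight > 60)

# The same condition written as a SQL-style string
heavy_sql <- filter(sdf, "Weight > 60")

# Two conditions combined with &
heavy_b <- filter(sdf, sdf$Weight > 60 & sdf$Team == "B")

# head() returns at most the requested number of rows as a local R data frame
print(head(heavy, 5))

# Collect sorted results so they can be compared locally
heavy_local <- collect(arrange(heavy, "Weight"))
heavy_sql_local <- collect(arrange(heavy_sql, "Weight"))
heavy_b_local <- collect(arrange(heavy_b, "Weight"))

sparkR.session.stop()
```

The Column form is checked when the expression is built, while the string form is parsed by Spark SQL, so a misspelled column name in the string only surfaces when Spark analyses the query.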

#Sort dataframe by the specified columns

showDF(orderBy(data,"Weight"))

showDF(arrange(data,"Weight",decreasing=FALSE))

showDF(arrange(data,"Weight",decreasing=TRUE))

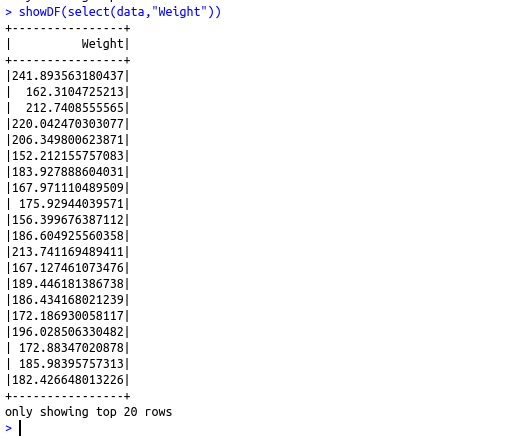

#select a set of columns with names or Column expressions

showDF(select(data,"Weight"))
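The call above selects a single column by name. As a minimal sketch of the other case, selecting with Column expressions, the snippet below picks several columns and derives a new one inside select. It assumes SparkR is installed and a local Spark is available. The `people` data, its column names and the `DoubleWeight` column are hypothetical and only serve the illustration.

```r
library(SparkR)

# Assumption: a local Spark installation is reachable from this R session
sparkR.session(master = "local[*]")

# Hypothetical sample data, used only for illustration
people <- data.frame(
  Name = c("Ann", "Bob", "Cid", "Dee"),
  Team = c("A", "A", "B", "B"),
  Weight = c(55, 72, 64, 81),
  stringsAsFactors = FALSE
)
sdf <- createDataFrame(people)

# Several columns selected by name
by_name <- select(sdf, "Name", "Weight")

# Column expressions: one column as-is, one computed and renamed with alias()
derived <- select(sdf, sdf$Name, alias(sdf$Weight * 2, "DoubleWeight"))

showDF(by_name)
showDF(derived)

# Column names of each result, plus a sorted local copy of the derived frame
by_name_cols <- columns(by_name)
derived_cols <- columns(derived)
derived_local <- collect(arrange(derived, "Name"))

sparkR.session.stop()
```

Without alias(), Spark generates a name for the computed column from the expression itself, which is awkward to refer to in later steps.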