To implement a decision tree classifier in Python using the Iris dataset.

# Import libraries
import pandas as pd
import warnings
warnings.filterwarnings("ignore")
import numpy as np
import seaborn as sns
import matplotlib.pyplot as plt
from sklearn.tree import DecisionTreeClassifier
from sklearn import tree
import pydotplus
from sklearn.model_selection import train_test_split
from sklearn import metrics
sns.set(style="ticks", color_codes=True)

# Load the Iris dataset from the UCI Machine Learning Repository
url = "https://archive.ics.uci.edu/ml/machine-learning-databases/iris/iris.data"
names = ['sepal-length', 'sepal-width', 'petal-length', 'petal-width', 'class']
data = pd.read_csv(url, names=names)

df = pd.DataFrame(data)
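If the UCI URL is unreachable, scikit-learn ships its own copy of the Iris data. The sketch below assumes scikit-learn 0.23 or later (for `as_frame=True`) and rebuilds a DataFrame with the same column names and `Iris-...` class labels as the file loaded above. The helper name `load_iris_like_uci` is hypothetical, and the two copies of the dataset are not guaranteed to match value for value.

```python
import pandas as pd
from sklearn.datasets import load_iris

def load_iris_like_uci():
    """Hypothetical helper: build a UCI-style Iris DataFrame from scikit-learn's bundled copy."""
    iris = load_iris(as_frame=True)
    frame = iris.data.copy()
    # Rename the four measurement columns to the names used in this walkthrough
    frame.columns = ['sepal-length', 'sepal-width', 'petal-length', 'petal-width']
    # Map integer targets 0/1/2 to UCI-style labels such as "Iris-setosa"
    frame['class'] = ["Iris-" + iris.target_names[t] for t in iris.target]
    return frame

df = load_iris_like_uci()
print(df.shape)
print(df['class'].value_counts())
```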

# Check each column for missing values

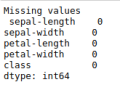

print("Missing values\n", df.isnull().sum())
print("\n")

# Descriptive statistics
print("Descriptive statistics\n", df.describe())

# Separate the features (X) from the target label (y)
X = df.drop(columns='class')
y = df['class']

# Check for outliers with box plots
print("\n")

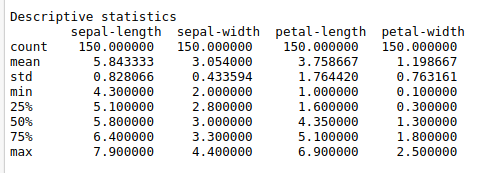

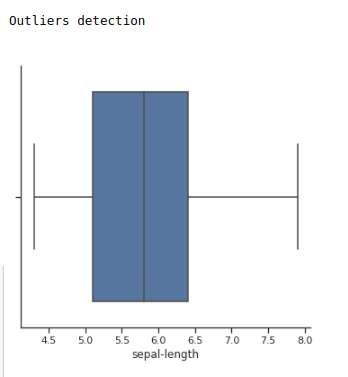

print("Outlier detection\n")
sns.catplot(x="sepal-length", kind="box", data=df)
plt.show()
sns.catplot(x="sepal-width", kind="box", data=df)
plt.show()

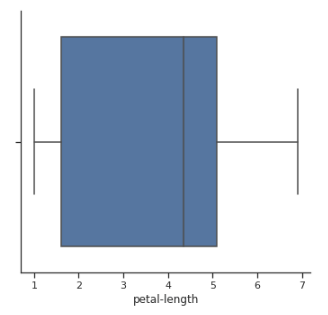

sns.catplot(x="petal-length", kind="box", data=df)
plt.show()

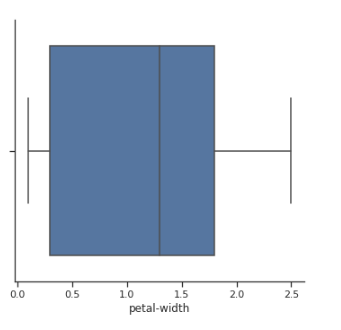

sns.catplot(x="petal-width", kind="box", data=df)
plt.show()
print("\n")

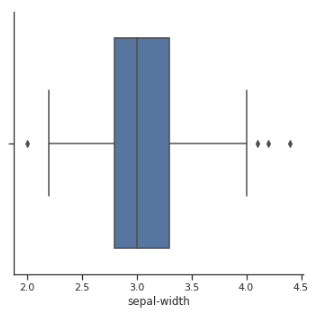

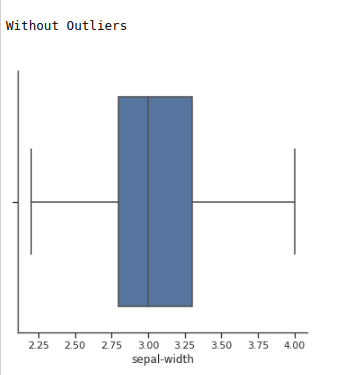
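The box plots above flag outliers with Tukey's rule: a point is drawn individually when it lies more than 1.5 × IQR below the first quartile or above the third quartile (seaborn's default whisker length, `whis=1.5`). The sketch below computes the same fences numerically so outliers can be counted rather than read off the plot. The helper names `tukey_fences` and `outlier_report` are hypothetical, and the quartiles use pandas' default linear interpolation, which may differ marginally from a given plotting backend.

```python
import pandas as pd

def tukey_fences(s, k=1.5):
    """Return (lower, upper) = (Q1 - k*IQR, Q3 + k*IQR) for a numeric Series."""
    q1, q3 = s.quantile(0.25), s.quantile(0.75)
    iqr = q3 - q1
    return q1 - k * iqr, q3 + k * iqr

def outlier_report(df, columns, k=1.5):
    """Tabulate the fences and the number of values outside them for each column."""
    rows = []
    for col in columns:
        low, high = tukey_fences(df[col], k)
        n_out = int(((df[col] < low) | (df[col] > high)).sum())
        rows.append({"column": col, "lower": low, "upper": high, "n_outliers": n_out})
    return pd.DataFrame(rows).set_index("column")

# Toy data: 100 sits far above the rest of column "a"; column "b" has no outliers
demo = pd.DataFrame({"a": [1, 2, 3, 4, 100], "b": [5.0, 5.1, 4.9, 5.2, 5.0]})
print(outlier_report(demo, ["a", "b"]))
```

On the Iris frame, `outlier_report(df, names[:-1])` would list the fences and outlier counts for the four measurements.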

# Remove outliers in sepal-width by replacing them with the median of the remaining values
print("Without Outliers\n")

# Low outliers: values <= 2 become the median of the values above 2
median = df.loc[df['sepal-width'] > 2, 'sepal-width'].median()
df.loc[df['sepal-width'] <= 2, 'sepal-width'] = median

# High outliers: values > 4 become the median of the values up to 4
median = df.loc[df['sepal-width'] <= 4, 'sepal-width'].median()
df.loc[df['sepal-width'] > 4, 'sepal-width'] = median

sns.catplot(x="sepal-width", kind="box", data=df)
plt.show()

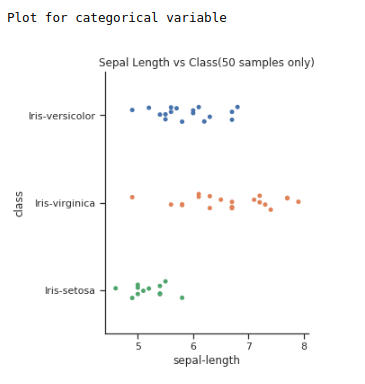
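The cleanup above uses hand-picked cutoffs of 2 and 4 for `sepal-width`. One possible generalization, sketched here as an assumption rather than the method used in this walkthrough, takes the cutoffs from the same Tukey fences the box plot uses and replaces everything outside them with the median of the in-range values. Unlike the two-step code above, it uses a single median for both tails. `replace_outliers_with_median` is a hypothetical helper name.

```python
import pandas as pd

def replace_outliers_with_median(df, column, k=1.5):
    """Return a copy of df in which values of `column` outside the Tukey fences
    (Q1 - k*IQR, Q3 + k*IQR) are replaced by the median of the in-range values."""
    q1, q3 = df[column].quantile(0.25), df[column].quantile(0.75)
    iqr = q3 - q1
    low, high = q1 - k * iqr, q3 + k * iqr
    out = df.copy()
    inside = out[column].between(low, high)  # inclusive on both ends
    out.loc[~inside, column] = out.loc[inside, column].median()
    return out

# Toy data: 9.9 is far outside the fences of the other values
demo = pd.DataFrame({'w': [3.0, 3.1, 2.9, 3.2, 3.0, 9.9]})
cleaned = replace_outliers_with_median(demo, 'w')
print(cleaned['w'].tolist())
```

Applied to this data, it would be called as `df = replace_outliers_with_median(df, 'sepal-width')`. Returning a copy instead of modifying in place keeps the raw data available for comparison.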

# Categorical scatter plot of each independent variable against the class
print("Categorical scatter plots\n")
df1 = df.sample(50)
sns.catplot(x="sepal-length", y="class", data=df1, marker='o')
plt.title("Sepal Length vs Class (50 samples only)")
plt.show()

print("\n")

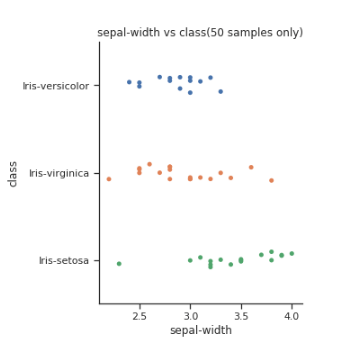

df1 = df.sample(50)
sns.catplot(x="sepal-width", y="class", data=df1, marker='o')
plt.title("Sepal Width vs Class (50 samples only)")
plt.show()
print("\n")

df1 = df.sample(50)
sns.catplot(x="petal-length", y="class", data=df1, marker='o')
plt.title("Petal Length vs Class (50 samples only)")
plt.show()
print("\n")

df1 = df.sample(50)
sns.catplot(x="petal-width", y="class", data=df1, marker='o')
plt.title("Petal Width vs Class (50 samples only)")
plt.show()
print("\n")


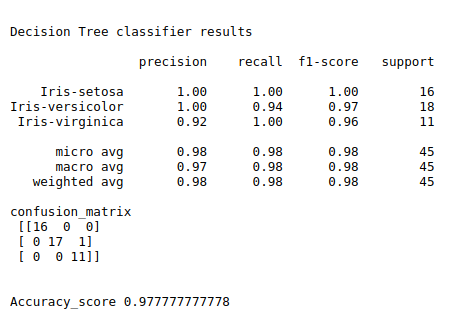
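`DecisionTreeClassifier` uses the Gini impurity criterion by default (`criterion="gini"`). For a node whose samples have class proportions p_k, the impurity is G = 1 - Σ p_k², and the tree grows by choosing, at each node, the feature and threshold whose two children have the lowest size-weighted impurity. The toy sketch below performs that search for a single feature. It is a simplified illustration, not scikit-learn's optimized implementation, which searches across all features and then recurses into each child; the sample values are made up.

```python
import numpy as np

def gini(labels):
    """Gini impurity 1 - sum_k p_k^2 of an array of class labels."""
    _, counts = np.unique(labels, return_counts=True)
    p = counts / counts.sum()
    return 1.0 - np.sum(p ** 2)

def best_threshold(x, y):
    """Try the midpoints between sorted unique values of one feature x and return
    (threshold, weighted child impurity) for the split x <= t vs x > t with the
    lowest size-weighted Gini impurity. Returns (None, inf) if x is constant."""
    values = np.unique(x)
    best_t, best_score = None, np.inf
    for t in (values[:-1] + values[1:]) / 2.0:
        left, right = y[x <= t], y[x > t]
        score = (len(left) * gini(left) + len(right) * gini(right)) / len(y)
        if score < best_score:
            best_t, best_score = t, score
    return best_t, best_score

# Made-up petal lengths for two classes
feature_demo = np.array([1.4, 1.3, 1.5, 4.7, 4.5, 5.1])
labels_demo = np.array(['setosa'] * 3 + ['versicolor'] * 3)
print(gini(labels_demo))
print(best_threshold(feature_demo, labels_demo))
```

A threshold of 3.0 separates the two classes perfectly, so both children are pure and the weighted impurity drops from 0.5 at the parent to 0.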

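print("Decision tree classifier results\n")

# Rebuild X and y from the cleaned DataFrame (the X defined earlier predates outlier removal)
X = df.drop(columns='class')
y = df['class']

# Split the data into training (70%) and test (30%) sets
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.3, random_state=0)

# Fit the tree; random_state makes the fitted tree reproducible
classifier = DecisionTreeClassifier(random_state=0)
classifier.fit(X_train, y_train)
y_pred = classifier.predict(X_test)

print(metrics.classification_report(y_test, y_pred))
print("Confusion matrix\n", metrics.confusion_matrix(y_test, y_pred))
print("\n")
print("Accuracy score:", metrics.accuracy_score(y_test, y_pred))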

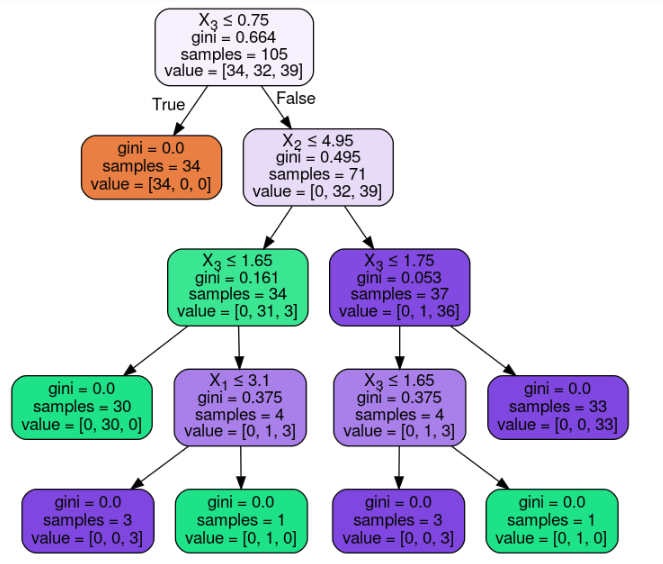
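The classification report and accuracy printed above can all be derived from the confusion matrix, whose rows are true classes and columns are predicted classes in scikit-learn's layout (the "support" column is the row sum). The sketch below recomputes accuracy and per-class precision, recall and F1 from such a matrix; the 3×3 matrix is hypothetical and is not this model's actual output. A class that is never predicted would give a zero column sum and a `nan` precision here, whereas scikit-learn reports 0 with a warning.

```python
import numpy as np

def metrics_from_confusion(cm):
    """Accuracy plus per-class precision, recall and F1 from a confusion matrix
    laid out as in sklearn.metrics.confusion_matrix (rows = true class,
    columns = predicted class)."""
    cm = np.asarray(cm, dtype=float)
    tp = np.diag(cm)                  # correct predictions for each class
    accuracy = tp.sum() / cm.sum()
    precision = tp / cm.sum(axis=0)   # column sum = everything predicted as that class
    recall = tp / cm.sum(axis=1)      # row sum = every true member of that class
    f1 = 2 * precision * recall / (precision + recall)
    return accuracy, precision, recall, f1

# Hypothetical 3-class confusion matrix (not this model's actual output)
cm_demo = [[10, 0, 0],
           [0, 9, 1],
           [0, 2, 8]]
acc, prec, rec, f1 = metrics_from_confusion(cm_demo)
print("accuracy:", acc)
print("precision:", prec)
print("recall:", rec)
print("f1:", f1)
```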

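# Visualize the fitted decision tree (requires pydotplus and the Graphviz "dot" program)
from io import StringIO  # sklearn.externals.six was removed in scikit-learn 0.23
from IPython.display import Image
from sklearn.tree import export_graphviz
import pydotplus

dot_data = StringIO()
export_graphviz(classifier, out_file=dot_data,
                filled=True, rounded=True,
                special_characters=True,
                feature_names=list(X.columns),           # label splits with column names
                class_names=list(classifier.classes_))   # label leaves with species names
graph = pydotplus.graph_from_dot_data(dot_data.getvalue())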

Image(graph.create_png())
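Rendering the tree as a PNG needs both pydotplus and the Graphviz `dot` executable. If those are not installed, `sklearn.tree.export_text` (scikit-learn 0.21 and later) prints the same split rules as indented text. The self-contained sketch below fits a depth-limited tree on scikit-learn's bundled copy of Iris so the output stays short; `max_depth=2` is an illustrative assumption, not a setting from this walkthrough.

```python
from sklearn.datasets import load_iris
from sklearn.tree import DecisionTreeClassifier, export_text

iris = load_iris()
clf = DecisionTreeClassifier(max_depth=2, random_state=0).fit(iris.data, iris.target)
# Feature names follow this walkthrough's column naming
rules = export_text(clf, feature_names=['sepal-length', 'sepal-width',
                                        'petal-length', 'petal-width'])
print(rules)
```

With the model trained above, the equivalent call would be `print(export_text(classifier, feature_names=list(X.columns)))`.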