Research breakthrough possible @S-Logix

pro@slogix.in

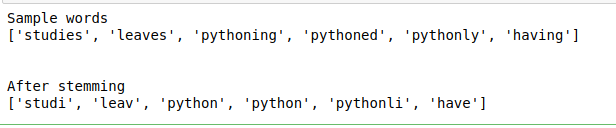

To understand how to perform word stemming in natural language processing (NLP) using the NLTK library.

Input: a list of sample words

Import the necessary libraries.

Take a list of sample words.

Create a PorterStemmer instance.

Stem each word with the stemmer's stem() method.

Print the results.
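The steps above can also be wrapped in a small reusable function. This is a minimal sketch, not part of the original tutorial: the function name `stem_words` is hypothetical, and it assumes that splitting on whitespace is adequate tokenization (punctuation is not handled).

```python
from nltk.stem import PorterStemmer

def stem_words(text):
    """Hypothetical helper: split text on whitespace and return the Porter stem of each token."""
    stemmer = PorterStemmer()
    return [stemmer.stem(token) for token in text.split()]

print(stem_words("studies leaves having"))
```

For real text you would normally use a proper tokenizer instead of `str.split()`, so that punctuation does not stay attached to words.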

```python
from nltk.stem import PorterStemmer

# Sample words
example_words = ["studies", "leaves", "pythoning", "pythoned", "pythonly", "having"]

print("Sample words")
print(example_words)
print("\n")

# Create the Porter stemmer
ps = PorterStemmer()

# Stem each word; the list is not named "re" to avoid shadowing Python's re module
stemmed_words = []
for word in example_words:
    stemmed_words.append(ps.stem(word))

print("After stemming")
print(stemmed_words)
```
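Stemming is useful because different inflected forms can collapse to a single stem; for example, "pythoning" and "pythoned" both reduce to "python". As an illustrative extension (not part of the original tutorial), the sketch below groups the same sample words by their Porter stem using `collections.defaultdict`; the variable name `groups` is arbitrary.

```python
from collections import defaultdict
from nltk.stem import PorterStemmer

ps = PorterStemmer()
example_words = ["studies", "leaves", "pythoning", "pythoned", "pythonly", "having"]

# Map each stem to the list of original words that produce it
groups = defaultdict(list)
for word in example_words:
    groups[ps.stem(word)].append(word)

for stem, words in groups.items():
    print(stem, "<-", words)
```

Note that stems such as "studi" or "leav" are not dictionary words: a stemmer strips suffixes by rule rather than looking words up.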