To implement pipeline architecture for sentiment analysis in spark with R using sparklyR package

spark_connect(master = “local”) – To create a spark connection

sdf_copy_to(spark_connection,R object,name) – To copy data to spark environment

sdf_partition(spark_dataframe,partitions with weights,seed) – To partition spark dataframe into multiple groups

ml_pipeline(spark connection) – To create a Spark ML pipeline

ft_r_formula(formula) – To implement the transforms required for fititng a dataset against an R model formula

ml_random_forest_classifier() – To build a random forest model

ml_fit(pipeline_model,train_data) – To fit the model

ml_transform(fitted_pipeline,test_data) – To predict the test data

#Load the sparklyr library

library(sparklyr)

#Create a spark connection

sc #Copy data to spark environment

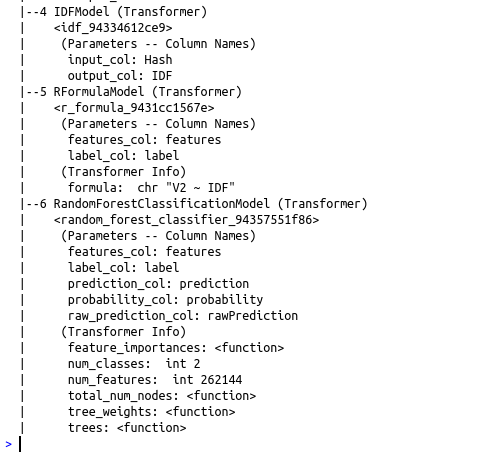

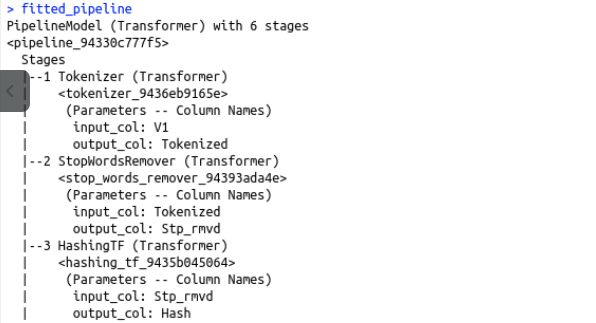

data_amz %

ft_tokenizer(input_col=”V1″,output_col=”Tokenized”) %>%

ft_stop_words_remover(input_col=”Tokenized”,output_col =”Stp_rmvd”)%>%

ft_hashing_tf(input_col = “Stp_rmvd”,output_col = “Hash”)%>%

ft_idf(input_col=”Hash”,output_col=”IDF”)%>%

ft_r_formula(V2~IDF) %>%

ml_random_forest_classifier()

#Split the data for train and test

partitions=sdf_partition(data_amz,training=0.8,test=0.2,seed=111)

train_data=partitions$training

test_data=partitions$test

#Fit the pipeline model

fitted_pipeline fitted_pipeline

#Predict using the test data

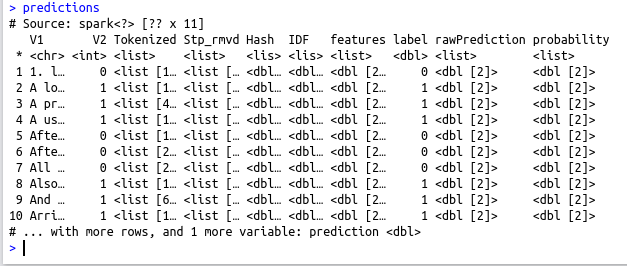

predictions predictions

#Evaluate the metrics AUC

cat(“Area Under Curve : “,ml_binary_classification_evaluator(predictions, label_col = “label”,prediction_col = “prediction”))