Research breakthrough possible @S-Logix

pro@slogix.in

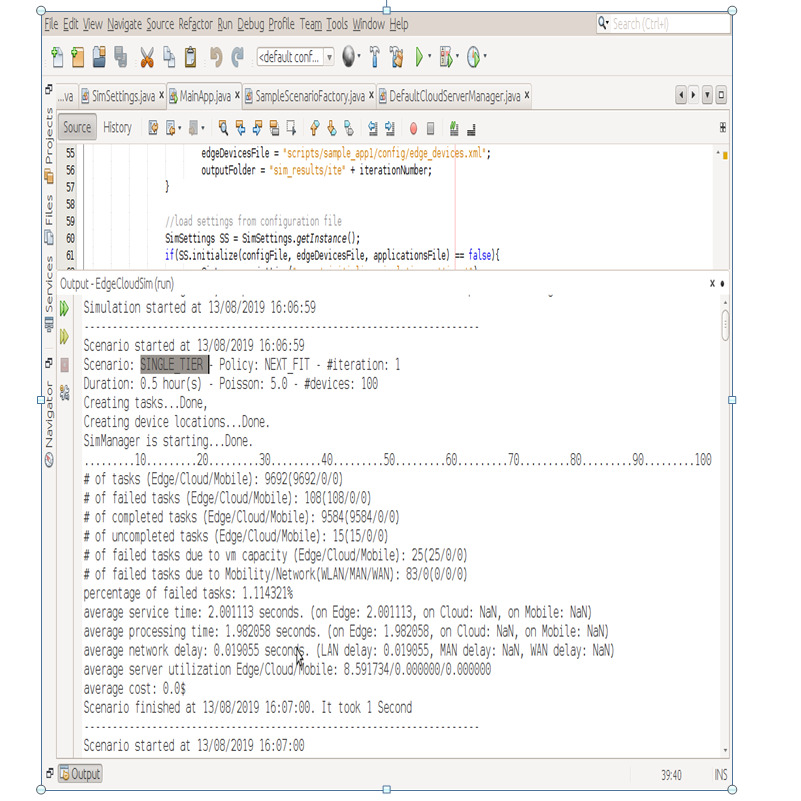

Single-tier – allows mobile devices to use the edge server located in the same network cell.

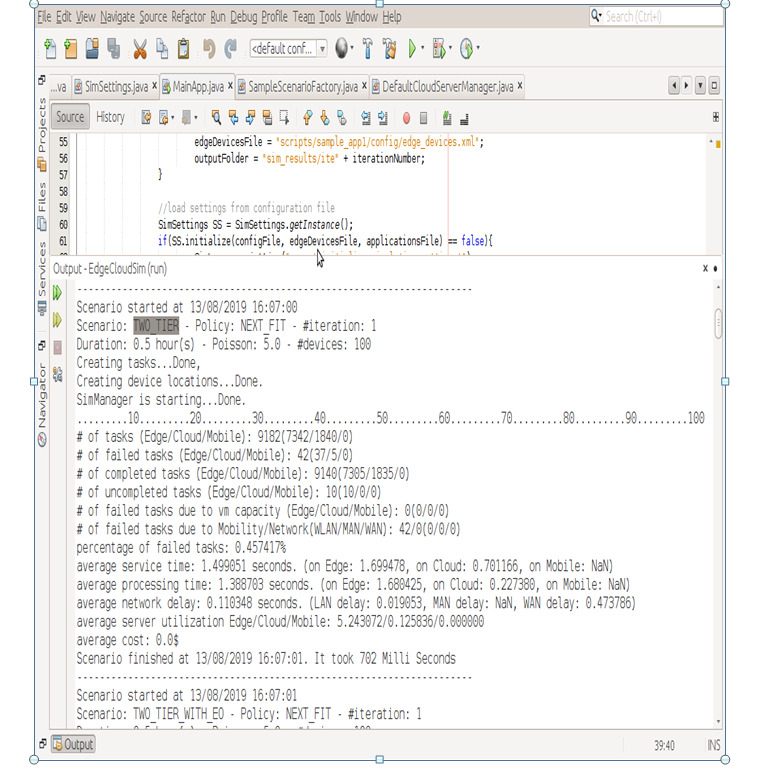

Two-tier – in this architecture, mobile devices can send their tasks to the global cloud over the WAN connection provided by the access point (AP) they are connected to.

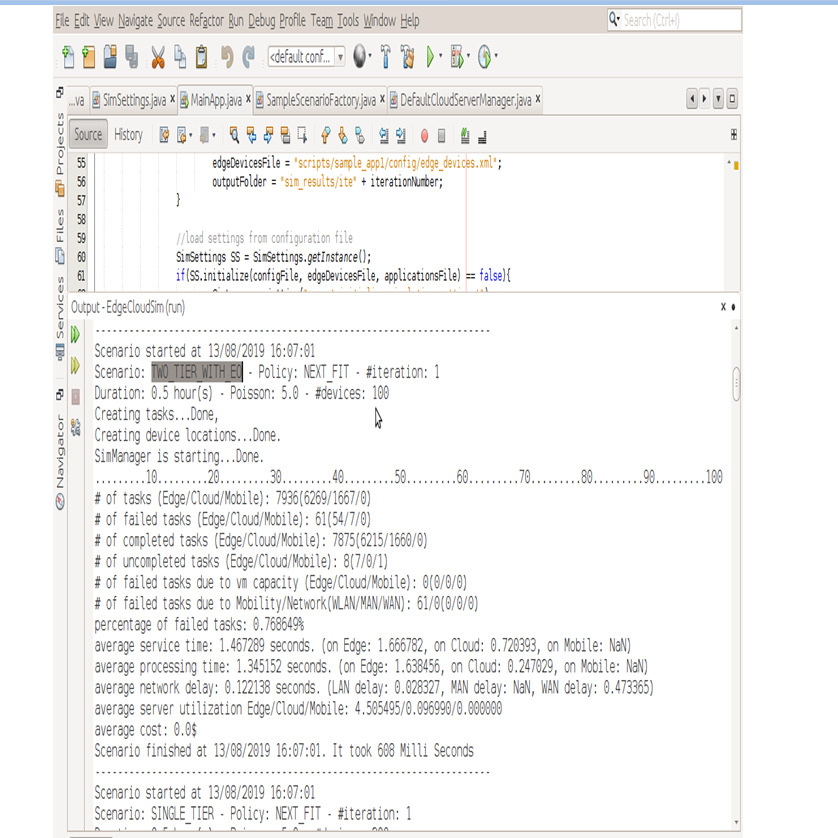

Two-tier with EO – for tasks executed on the first tier, only the two-tier with EO (edge orchestrator) architecture can offload them to any edge server, including servers located in different places. It is assumed that the edge servers and the edge orchestrator are connected to the same network.
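The difference between the three architectures can be read as a difference in which offloading targets a mobile device is allowed to reach. The sketch below is only an illustration of that idea, not code from any simulator: the names `Architecture`, `EdgeServer`, `CLOUD`, and `candidate_targets` are hypothetical. It also assumes that the two-tier variants keep the local edge server as an option alongside the cloud, which the descriptions above do not state explicitly.

```python
from dataclasses import dataclass
from enum import Enum


class Architecture(Enum):
    SINGLE_TIER = "single-tier"
    TWO_TIER = "two-tier"
    TWO_TIER_WITH_EO = "two-tier with EO"


@dataclass(frozen=True)
class EdgeServer:
    name: str
    cell: int  # network cell (access point) the server belongs to


CLOUD = "global-cloud"  # hypothetical label for the cloud reached over WAN


def candidate_targets(arch, device_cell, edge_servers):
    """List the places a device in `device_cell` may offload a task to."""
    # Edge servers in the same network cell as the device.
    local = [s.name for s in edge_servers if s.cell == device_cell]

    if arch is Architecture.SINGLE_TIER:
        # Only the edge server in the device's own cell.
        return local
    if arch is Architecture.TWO_TIER:
        # Assumption: local edge server, plus the cloud over the AP's WAN link.
        return local + [CLOUD]
    # Two-tier with EO: the orchestrator can pick any edge server,
    # including ones in other cells (assumed: the cloud stays available).
    return [s.name for s in edge_servers] + [CLOUD]


if __name__ == "__main__":
    servers = [EdgeServer("edge-0", 0), EdgeServer("edge-1", 1)]
    for arch in Architecture:
        print(arch.value, "->", candidate_targets(arch, 0, servers))
```

Keeping the rule in a single function makes the architectural choice a single parameter, so the same task stream could be replayed under each architecture and compared.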