How to predict whether a loan is approved or not using deep learning with Keras in Python

Data: the Loan Prediction data set (Kaggle).

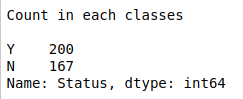

Goal: predict the status (approved or not) of each Loan ID in the test set.

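1. Import the necessary libraries (pandas, and Keras's `Sequential` and `Dense`).
2. Load the train and test data. The train data contains the pre-classified target variable (the loan status); the test data does not.
3. Encode the string (categorical) columns as numbers.
4. Separate the input features (X) from the target variable (y).
5. Build the deep learning model with Keras.
6. Train the model on the train data.
7. Check the goodness of fit. Because the test data has no target variable, the accuracy Keras reports during training is measured on the training data.
8. Finally, predict the status of each Loan ID in the test data.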
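The encoding step is easy to get wrong when the train and test files are encoded one at a time. The mapping `{k: i for i, k in enumerate(s.unique())}` numbers categories in the order they first appear, so the same category can receive different codes in the two files. The sketch below uses two tiny hypothetical frames with a `Property_Area` column to show the mismatch, plus one possible fix (an assumption, not the author's code): learn the category list from the training data and reuse it through `pd.Categorical`, which gives unseen or missing values the code `-1`.

```python
import pandas as pd

# Hypothetical toy frames standing in for train.csv / test.csv.
train = pd.DataFrame({"Property_Area": ["Urban", "Rural", "Semiurban", "Urban"]})
test = pd.DataFrame({"Property_Area": ["Semiurban", "Urban"]})

def first_seen_codes(s):
    # Number the categories in the order they first appear in this Series only.
    return s.map({k: i for i, k in enumerate(s.unique())})

# Encoded separately, "Semiurban" becomes 2 in train but 0 in test.
print(first_seen_codes(train["Property_Area"]).tolist())  # [0, 1, 2, 0]
print(first_seen_codes(test["Property_Area"]).tolist())   # [0, 1]

# Fix: one category list learned from the training data, reused for both frames.
categories = pd.Index(train["Property_Area"].dropna().unique())
train_codes = pd.Categorical(train["Property_Area"], categories=categories).codes
test_codes = pd.Categorical(test["Property_Area"], categories=categories).codes
print(train_codes.tolist())  # [0, 1, 2, 0]
print(test_codes.tolist())   # [2, 0]
```

Building the mapping from the train and test rows stacked together is an equally valid way to keep the codes consistent, since no target values are involved.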

```python
#import libraries
import warnings
warnings.filterwarnings('ignore')

import time

import numpy as np
import pandas as pd
from keras.models import Sequential
from keras.layers import Dense
from keras.utils import to_categorical   #np_utils is not available in recent Keras versions

#load the train data
train_data = pd.read_csv('/home/soft50/soft50/Sathish/practice/loan_prediction/train.csv')

#load the test data
test_data = pd.read_csv('/home/soft50/soft50/Sathish/practice/loan_prediction/test.csv')

#encode the input variables
#columns 1-11 (Gender ... Property_Area) are the features; column 0 is Loan_ID
n_train = len(train_data)
#stack train and test so that a category gets the SAME code in both sets
#(encoding each file separately can give one category two different codes)
X_all = pd.concat([train_data.iloc[:, 1:12], test_data.iloc[:, 1:12]], ignore_index=True)
for col in X_all.columns:
    if not pd.api.types.is_numeric_dtype(X_all[col]):
        values = X_all[col].fillna('missing')   #a missing answer becomes its own category
        codes = {k: i for i, k in enumerate(values.unique())}
        X_all[col] = values.map(codes)
#numeric columns keep their real values; fill their gaps with the train medians
X_all = X_all.fillna(X_all.iloc[:n_train].median())

#Feature selection
X_train = X_all.iloc[:n_train].values
X_test = X_all.iloc[n_train:].values
y_train = (train_data.iloc[:, 12] == 'Y').astype(int)   #Loan_Status: 1 = approved (Y), 0 = not (N)

#make dependent variable categorical (one-hot, 2 classes)
y_train = to_categorical(y_train, num_classes=2)

#Build the deep learning model
batch_size = 20

#shape of input (number of feature columns)
n_cols_2 = X_train.shape[1]
```
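The numeric columns (incomes, loan amounts) are on a much larger scale than the small integer codes, which can make training with Adam slow or unstable. Standardizing each column with the mean and standard deviation of the training data is a common remedy; it is not part of the original code. A minimal NumPy sketch with hypothetical values:

```python
import numpy as np

def standardize(train_X, test_X):
    """Scale each column to zero mean and unit variance using train statistics only."""
    mean = train_X.mean(axis=0)
    std = train_X.std(axis=0)
    std[std == 0] = 1.0  # leave constant columns unscaled instead of dividing by zero
    return (train_X - mean) / std, (test_X - mean) / std

# Hypothetical rows: [ApplicantIncome, LoanAmount]
train_X = np.array([[5000.0, 120.0], [3000.0, 100.0], [4000.0, 110.0]])
test_X = np.array([[4000.0, 130.0]])

train_s, test_s = standardize(train_X, test_X)
print(train_s)
print(test_s)
```

If used, it would be applied to the NumPy arrays before `fit` and `predict`, for example `X_train, X_test = standardize(X_train, X_test)`. scikit-learn's `StandardScaler` performs the same transformation.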

#create deep neural networks

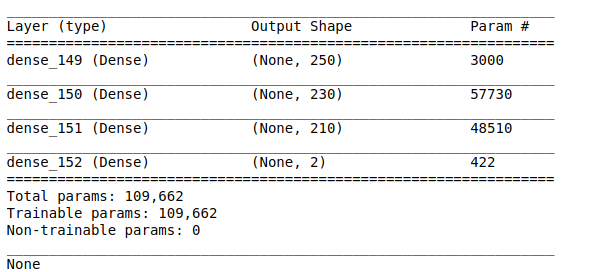

model_2 = Sequential()

#add layers to model

model_2.add(Dense(250, activation=’relu’, input_shape=(n_cols_2,)))

model_2.add(Dense(230, activation=’relu’))

model_2.add(Dense(210, activation=’relu’))

model_2.add(Dense(2, activation=’softmax’))

print(model_2.summary())
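The parameter counts reported by `model_2.summary()` follow directly from the layer sizes: a `Dense` layer with `n` inputs and `u` units has `n * u` weights plus `u` biases. The sketch below reproduces that arithmetic, assuming 11 input features (the width of `X_train`, taken from columns 1 to 11 of the data).

```python
# Dense layer parameters = inputs * units (weights) + units (biases).
n_inputs = 11                     # assumed: 11 feature columns (Gender ... Property_Area)
layer_units = [250, 230, 210, 2]  # the four Dense layers of model_2

def dense_param_counts(n_inputs, layer_units):
    counts = []
    fan_in = n_inputs
    for units in layer_units:
        counts.append(fan_in * units + units)
        fan_in = units            # each layer's output width is the next layer's input width
    return counts

counts = dense_param_counts(n_inputs, layer_units)
print(counts)       # [3000, 57730, 48510, 422]
print(sum(counts))  # 109662
```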

#Compile the model

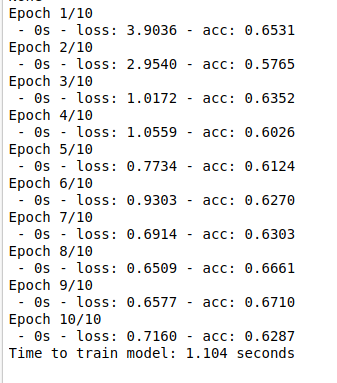

model_2.compile(optimizer=’adam’, loss=’binary_crossentropy’, metrics=[‘accuracy’])
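The model is compiled with `binary_crossentropy`, although a 2-unit softmax over one-hot labels is the setting `categorical_crossentropy` is usually paired with. For two classes the two losses coincide: Keras's binary cross-entropy averages the per-unit losses over the last axis, and the two softmax outputs sum to 1. The NumPy sketch below checks this on one hypothetical example; it re-implements the formulas rather than calling Keras.

```python
import numpy as np

def softmax(z):
    e = np.exp(z - z.max())  # subtract the max for numerical stability
    return e / e.sum()

def categorical_crossentropy(y_true, p):
    return -np.sum(y_true * np.log(p))

def binary_crossentropy(y_true, p):
    # Binary cross-entropy for each output unit, averaged over the units.
    return -np.mean(y_true * np.log(p) + (1 - y_true) * np.log(1 - p))

logits = np.array([1.0, 3.0])  # hypothetical raw outputs of the last Dense layer
y_true = np.array([0.0, 1.0])  # one-hot label for class 1, as produced by to_categorical
p = softmax(logits)

cce = categorical_crossentropy(y_true, p)
bce = binary_crossentropy(y_true, p)
print(p, cce, bce)  # the two losses agree for a 2-unit softmax
```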

```python
#Here we train the network
start_time = time.time()
model_2.fit(X_train, y_train, batch_size=batch_size, epochs=10, verbose=2)
end_time = time.time()

elapsed_time = end_time - start_time
print("Time to train model: %.3f seconds" % elapsed_time)
print("\n")
```

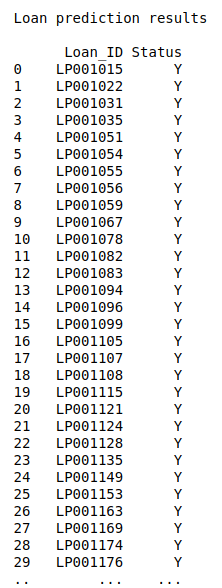
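The accuracy printed while training is measured on the same rows the network learns from, and the test data has no target variable, so that number says little about how well the model generalizes. One common approach, sketched here as an assumption rather than the author's method, is to hold back part of the labelled training rows for validation:

```python
import numpy as np

def train_val_split(X, y, val_fraction=0.2, seed=0):
    """Shuffle the row indices, then hold back val_fraction of the rows for validation."""
    rng = np.random.default_rng(seed)
    idx = rng.permutation(len(X))
    n_val = int(round(len(X) * val_fraction))
    val_idx, train_idx = idx[:n_val], idx[n_val:]
    return X[train_idx], X[val_idx], y[train_idx], y[val_idx]

# Hypothetical stand-ins for X_train / y_train: 10 rows, 3 features, one-hot labels.
X = np.arange(30, dtype=float).reshape(10, 3)
y = np.eye(2)[np.array([0, 1, 1, 0, 1, 1, 1, 0, 1, 1])]

X_tr, X_val, y_tr, y_val = train_val_split(X, y)
print(X_tr.shape, X_val.shape)  # (8, 3) (2, 3)
```

The held-out part could then be passed as `model_2.fit(X_tr, y_tr, validation_data=(X_val, y_val), ...)`. Keras's `fit` also accepts `validation_split=0.2`, but that takes the last 20% of the rows without shuffling them first, so a file sorted by any column would give a biased split. After training, `sklearn.metrics.classification_report(y_val.argmax(axis=1), model_2.predict(X_val).argmax(axis=1))` summarizes precision and recall per class.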

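```python
#Predict the test data
#predict_classes() was removed from recent Keras versions;
#take the index of the highest softmax output instead
prediction = np.argmax(model_2.predict(X_test), axis=1)
pred_df = pd.DataFrame(prediction)

#Combining the results
final = [test_data.iloc[:,0], pred_df]
df3 = pd.concat(final, axis=1)

#Export as a CSV file (index=False keeps Loan_ID as the first column)
df3.to_csv('/home/soft50/soft50/Sathish/practice/loan_prediction/result.csv', index=False)
```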
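Taking the `argmax` of the softmax outputs gives the same class indices that `predict_classes` used to return. Mapping those indices back to the `Y`/`N` values of the data makes the exported file easier to read. The sketch below uses hypothetical probabilities and IDs, and assumes class 1 was encoded from `Y`; with an encoding that numbers values in order of first appearance, the meaning of class 1 depends on the order of the training rows, so check the mapping first.

```python
import numpy as np
import pandas as pd

# Hypothetical softmax outputs: column 0 = not approved, column 1 = approved.
probs = np.array([[0.2, 0.8], [0.7, 0.3], [0.45, 0.55]])
loan_ids = pd.Series(["ID_1", "ID_2", "ID_3"], name="Loan_ID")  # hypothetical IDs

classes = probs.argmax(axis=1)             # index of the most probable class per row
labels = np.where(classes == 1, "Y", "N")  # assumes class 1 was encoded from "Y"
result = pd.DataFrame({"Loan_ID": loan_ids, "Loan_Status": labels})
print(result)
```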

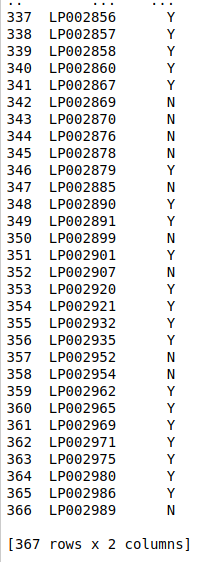

```python
res = pd.read_csv('…/loan_prediction/result.csv')

print("Loan prediction results\n")
print(res, "\n")

print("Count in each class\n")
print(res.iloc[:,1].value_counts())
```
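Raw counts are easier to judge next to the class balance of the training labels. A sketch using pandas' `value_counts(normalize=True)` with hypothetical labels: a large gap between the two columns, or a model that predicts a single class for every Loan ID, would be worth investigating before trusting the results.

```python
import pandas as pd

# Hypothetical predicted labels and training labels.
predicted = pd.Series(["Y", "Y", "N", "Y", "Y", "Y", "N", "Y"])
train_labels = pd.Series(["Y", "N", "Y", "Y", "N", "Y", "Y", "N", "Y", "Y"])

pred_share = predicted.value_counts(normalize=True)     # fraction of each class
train_share = train_labels.value_counts(normalize=True)

# Align the two distributions by class; a class never predicted shows as 0.0.
comparison = pd.DataFrame({"predicted": pred_share, "train": train_share}).fillna(0.0)
print(comparison)
```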