Calculating precision and recall from scratch using Python.

Iris data set.

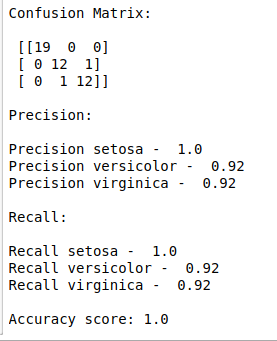

Confusion matrix.

Precision.

Recall.

Accuracy.
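For a multi-class problem such as Iris, all three measures can be read directly off the confusion matrix. As a sketch, assume $C$ is the confusion matrix in scikit-learn's layout, where $C_{ij}$ counts the samples whose true class is $i$ and whose predicted class is $j$. The symbols $C$, $i$, $j$ and $k$ are notation introduced here for illustration.

```latex
\[
\text{Precision}_k = \frac{C_{kk}}{\sum_{i} C_{ik}}, \qquad
\text{Recall}_k = \frac{C_{kk}}{\sum_{j} C_{kj}}, \qquad
\text{Accuracy} = \frac{\sum_{k} C_{kk}}{\sum_{i}\sum_{j} C_{ij}}
\]
```

Precision for class $k$ divides the diagonal entry by its column total (everything predicted as $k$), while recall divides it by its row total (everything that truly is $k$).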

Import necessary libraries.

Load the iris data set.

Define the independent (X) and dependent (y) variables.

Split the data into training and test sets.

Build the Naive Bayes model for the classification task.

Fit the training data to the model.

Predict the test data.

Calculate precision and recall from scratch.
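Before running the full script, it may help to see the arithmetic on a small confusion matrix. The counts below are made up for illustration (they are not the Iris results), the name `toy_cm` is hypothetical, and the layout assumes rows are true classes and columns are predicted classes, as in scikit-learn.

```python
# Hypothetical 3x3 confusion matrix (illustrative counts, not from the Iris run).
# Rows: true class, columns: predicted class.
toy_cm = [
    [5, 1, 0],  # true class 0
    [2, 6, 2],  # true class 1
    [0, 1, 8],  # true class 2
]

k = 0  # class of interest

tp = toy_cm[k][k]                              # predicted k and truly k
fp = sum(toy_cm[i][k] for i in range(3)) - tp  # predicted k, truly another class
fn = sum(toy_cm[k][j] for j in range(3)) - tp  # truly k, predicted as another class

precision_k = tp / (tp + fp)  # 5 / (5 + 2)
recall_k = tp / (tp + fn)     # 5 / (5 + 1)

print("precision:", round(precision_k, 2))
print("recall:", round(recall_k, 2))
```

False positives come from the rest of the class's column and false negatives from the rest of its row, which is the same pattern the script uses for each Iris species.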

    # Import libraries
    import warnings
    warnings.filterwarnings("ignore")

    import pandas as pd
    from sklearn.model_selection import train_test_split
    from sklearn.naive_bayes import MultinomialNB
    from sklearn.metrics import classification_report, confusion_matrix, accuracy_score

    # Load data
    data = pd.read_csv('/home/soft50/soft50/Sathish/practice/iris.csv')

    # Check for missing values
    print("Checking missing values\n\n", data.isnull().sum())

    # read_csv already returns a DataFrame, so this call is redundant but harmless
    df = pd.DataFrame(data)

    # Print the shape of the data
    print("\n")
    print("Shape of data\n\n", df.shape)

    # Define X (first four columns, the features) and y (fifth column, the label)
    X = df.iloc[:, 0:4]
    y = df.iloc[:, 4]

    # Split into training (70%) and test (30%) sets
    X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.3, random_state=42)

    # Show the training set and the test set
    print("\n")
    print("Training data set\n\n", X_train, "\n", y_train)
    print("\n")
    print("Testing data set\n\n", X_test)

    # Naive Bayes model
    naive_bayes = MultinomialNB()
    naive_bayes.fit(X_train, y_train)

    # Predict the test data
    y_pred = naive_bayes.predict(X_test)

    # Confusion matrix (rows are true classes, columns are predicted classes)
    cm = confusion_matrix(y_test, y_pred)
    print("\n")
    print("Confusion Matrix:\n\n", cm, "\n")

    print("Precision:\n")

    # Precision calculation from scratch:
    # diagonal entry divided by its column total (everything predicted as that class)
    def precision(cm):
        p = cm[0][0] / (cm[0][0] + cm[1][0] + cm[2][0])
        # A class that is never predicted gives 0/0 = nan; report it as 0.00
        if str(p) == 'nan':
            print("Precision setosa - ", "0.00")
        else:
            print("Precision setosa - ", round(p, 2))

    precision(cm)

    def precision1(cm):
        p1 = cm[1][1] / (cm[0][1] + cm[1][1] + cm[2][1])
        if str(p1) == 'nan':
            print("Precision versicolor - ", "0.00")
        else:
            print("Precision versicolor - ", round(p1, 2))

    precision1(cm)

    def precision2(cm):
        p2 = cm[2][2] / (cm[0][2] + cm[1][2] + cm[2][2])
        if str(p2) == 'nan':
            print("Precision virginica - ", "0.00")
        else:
            print("Precision virginica - ", round(p2, 2), "\n")

    precision2(cm)

    # Recall calculation from scratch:
    # diagonal entry divided by its row total (everything that truly is that class)
    print("Recall:\n")

    def recall(cm):
        r = cm[0][0] / (cm[0][0] + cm[0][1] + cm[0][2])
        # A class with no true samples gives 0/0 = nan; report it as 0.00
        if str(r) == 'nan':
            print("Recall setosa - ", "0.00")
        else:
            print("Recall setosa - ", round(r, 2))

    recall(cm)

    def recall1(cm):
        r1 = cm[1][1] / (cm[1][0] + cm[1][1] + cm[1][2])
        if str(r1) == 'nan':
            print("Recall versicolor - ", "0.00")
        else:
            print("Recall versicolor - ", round(r1, 2))

    recall1(cm)

    def recall2(cm):
        r2 = cm[2][2] / (cm[2][0] + cm[2][1] + cm[2][2])
        if str(r2) == 'nan':
            print("Recall virginica - ", "0.00")
        else:
            print("Recall virginica - ", round(r2, 2), "\n")

    recall2(cm)

    # Accuracy score, rounded to one decimal place
    print("Accuracy score:", round(accuracy_score(y_test, y_pred), 1))
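The six per-class functions in the script repeat the same pattern, so one possible generalisation is a single loop over the classes. The sketch below is an illustration rather than the original author's code: `per_class_metrics` and `accuracy_from_cm` are hypothetical helper names, the sample matrix is made up, and the class order setosa, versicolor, virginica is assumed to match the rows of the confusion matrix. It also computes accuracy from the matrix itself instead of calling `accuracy_score`.

```python
def per_class_metrics(cm, labels):
    """Return {label: (precision, recall)} for a square confusion matrix.

    Assumes rows are true classes and columns are predicted classes.
    A zero denominator (class never predicted, or never present) yields 0.0,
    mirroring the "0.00" fallback used in the script.
    """
    n = len(labels)
    metrics = {}
    for k, label in enumerate(labels):
        tp = cm[k][k]
        predicted_k = sum(cm[i][k] for i in range(n))  # column total
        actual_k = sum(cm[k][j] for j in range(n))     # row total
        precision = tp / predicted_k if predicted_k else 0.0
        recall = tp / actual_k if actual_k else 0.0
        metrics[label] = (precision, recall)
    return metrics


def accuracy_from_cm(cm):
    """Correct predictions (the diagonal) divided by all predictions."""
    n = len(cm)
    correct = sum(cm[k][k] for k in range(n))
    total = sum(cm[i][j] for i in range(n) for j in range(n))
    return correct / total if total else 0.0


# Made-up matrix for illustration; pass the real `cm` from the script instead.
sample_cm = [
    [19, 0, 0],
    [0, 10, 3],
    [0, 0, 13],
]
labels = ["setosa", "versicolor", "virginica"]  # assumed class order

for label, (p, r) in per_class_metrics(sample_cm, labels).items():
    print(f"{label}: precision={p:.2f} recall={r:.2f}")
print("accuracy:", round(accuracy_from_cm(sample_cm), 2))
```

Because the helpers only index `cm[i][j]`, they should accept either a nested list or the NumPy array returned by `confusion_matrix`. Checking the denominator explicitly avoids relying on the `nan` string comparison.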