To implement decision tree for regression using Spark with R

#Set up spark home

Sys.setenv(SPARK_HOME=”…../spark-2.4.0-bin-hadoop2.7″)

.libPaths(c(file.path(Sys.getenv(“SPARK_HOME”), “R”, “lib”), .libPaths()))

#Load the library

library(SparkR)

#Initialize the Spark Context

#To run spark in a local node give master=”local”

sc #Start the SparkSQL Context

sqlContext #Load the data set

data = read.df(“file:///…../servo.csv”,”csv”,header = “true”, inferSchema = “true”, na.strings = “NA”)

#Split the data into train and test set

splt_data=randomSplit(data,c(8,2),42)

trainingData=splt_data[[1]]

testData=splt_data[[2]]

xtest=select(testData,”Motor”,”Screw”,”Pgain”,”Vgain”)

ytest=select(testData,”Class”)

#Build the model

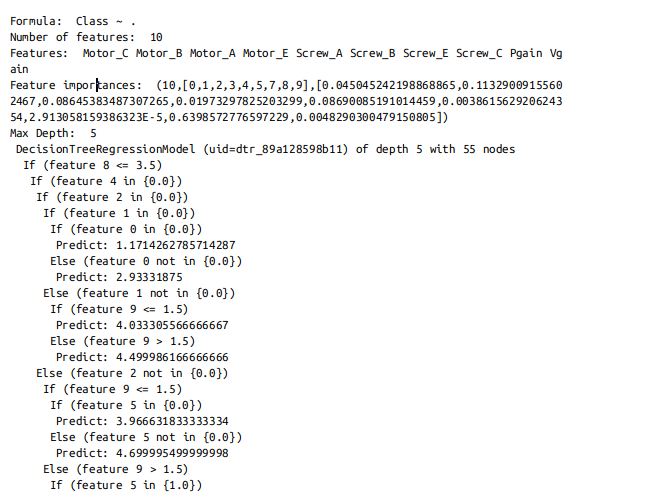

dec_tree summary(dec_tree)

#Predict using the test data

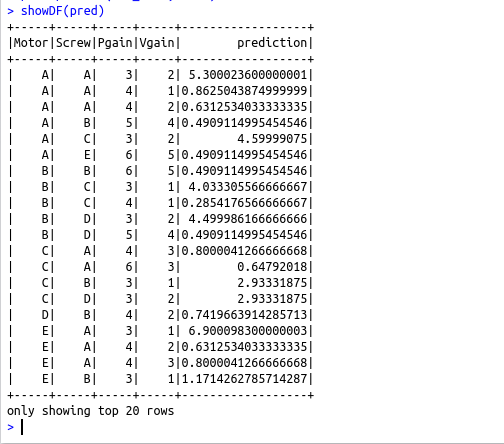

pred=predict(dec_tree,xtest)

showDF(pred)

#Convert the spark data frame to R data frame

y_pred=collect(select(pred,”prediction”),stringsAsFactors=FALSE)

y_true=collect(select(ytest,”Class”),stringsAsFactors=FALSE)

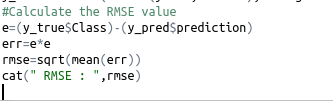

#Calculate the RMSE value

e=(y_true$Class)-(y_pred$prediction)

err=e*e

rmse=sqrt(mean(err))

cat(” RMSE : “,rmse)