Implementing frequent pattern mining (FP-Growth) with Spark and R (SparkR)

```r
# Set up SPARK_HOME
Sys.setenv(SPARK_HOME = "/…/spark-2.4.0-bin-hadoop2.7")
.libPaths(c(file.path(Sys.getenv("SPARK_HOME"), "R", "lib"), .libPaths()))

# Load the library
library(SparkR)

# Start a Spark session. In Spark 2.x, sparkR.session() replaces the
# deprecated sparkR.init()/sparkRSQL.init() pair (Spark and SQL contexts).
# To run Spark in local mode, give master = "local"
sparkR.session(master = "local")

# Load the data set: one transaction per line, read as a single string column
data <- read.df("file:///…./GsData.txt", "csv", header = "false",
                schema = structType(structField("raw_items", "string")),
                na.strings = "NA")
```

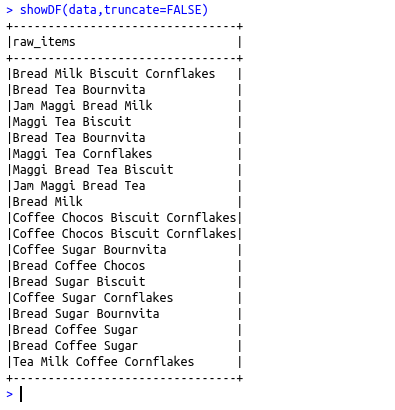

```r
showDF(data, truncate = FALSE)
```

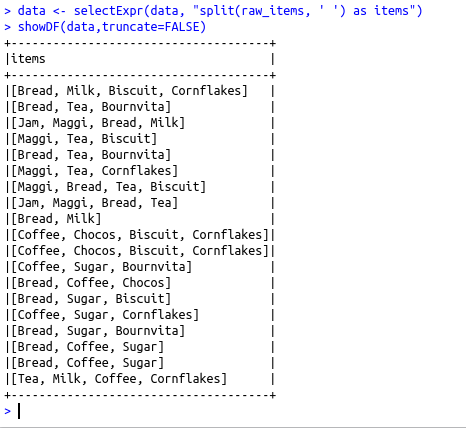
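Before FP-Growth can use the data, each raw line has to become an array of items. The base-R sketch below mirrors that step on a few hypothetical transactions, assuming items are separated by single spaces. It also drops repeated items within a line, because Spark's FP-Growth rejects transactions that contain duplicate items. `parse_transactions` is an illustrative helper, not part of SparkR.

```r
# Illustrative helper (hypothetical, not a SparkR function): turn raw
# transaction strings into a list of item vectors.
# Assumption: items are separated by single spaces.
parse_transactions <- function(raw_lines, sep = " ") {
  items <- strsplit(raw_lines, sep, fixed = TRUE)
  # Drop empty strings (from repeated separators) and duplicate items,
  # since Spark's FP-Growth requires unique items per transaction.
  lapply(items, function(x) unique(x[nzchar(x)]))
}

# Hypothetical sample lines; the real GsData.txt layout may differ.
raw <- c("a b c", "a b", "a c a", "b  c d")
transactions <- parse_transactions(raw)
str(transactions)
```

If GsData.txt can repeat an item on one line, the SparkR equivalent is to wrap the split in `array_distinct(...)`, a SQL function available from Spark 2.4.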

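```r
# Split each line into an array of items
# (assumes items are separated by single spaces; adjust to match GsData.txt)
data <- selectExpr(data, "split(raw_items, ' ') as items")
showDF(data, truncate = FALSE)

# Train the FP-Growth model. 0.3 and 0.8 are the SparkR defaults;
# tune minSupport and minConfidence for your data.
model <- spark.fpGrowth(data, minSupport = 0.3, minConfidence = 0.8,
                        itemsCol = "items")
```

To get the frequent itemsets: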

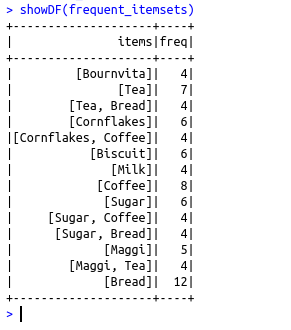

```r
frequent_itemsets <- spark.freqItemsets(model)
showDF(frequent_itemsets)
```
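`spark.freqItemsets` returns each frequent itemset with its count (`freq`). To sanity-check that output on a small sample, a brute-force counter like the sketch below can help. It is deliberately not FP-Growth: it enumerates every candidate itemset, so it only suits a handful of distinct items. The threshold `ceiling(min_support * n)` reflects my understanding of how Spark turns the fractional `minSupport` into a minimum count; the helper name and the toy transactions are hypothetical.

```r
# Brute-force frequent itemset counting (NOT FP-Growth): enumerate all
# candidate itemsets and count the transactions containing each one.
# Only practical for tiny data; useful for checking results by hand.
frequent_itemsets_bf <- function(transactions, min_support = 0.3) {
  n <- length(transactions)
  # Assumed to mirror Spark: frequent means count >= ceiling(min_support * n)
  min_count <- ceiling(min_support * n)
  universe <- sort(unique(unlist(transactions)))
  items <- character(0)
  freq <- integer(0)
  for (k in seq_along(universe)) {
    for (cand in combn(universe, k, simplify = FALSE)) {
      count <- sum(vapply(transactions, function(t) all(cand %in% t), logical(1)))
      if (count >= min_count) {
        items <- c(items, paste(cand, collapse = ","))
        freq <- c(freq, count)
      }
    }
  }
  data.frame(items = items, freq = freq, stringsAsFactors = FALSE)
}

# Hypothetical toy transactions
transactions <- list(c("a", "b", "c"), c("a", "b"), c("a", "c"), c("b", "c", "d"))
fi <- frequent_itemsets_bf(transactions, min_support = 0.5)
print(fi)
```

With four transactions and `min_support = 0.5`, an itemset needs a count of at least 2, so `d` (count 1) and every 3-item set are excluded.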

To get the association rules:

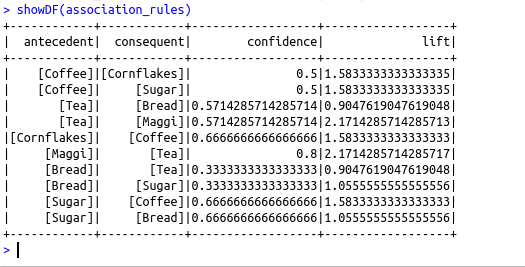

```r
association_rules <- spark.associationRules(model)
showDF(association_rules)
```
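Each association rule X => Y is scored by its confidence, count(X and Y together) / count(X), and its lift, the confidence divided by the fraction of transactions that contain Y. Spark derives rules only from frequent itemsets, keeps those whose confidence reaches `minConfidence`, and generates rules with a single item as the consequent. The sketch below recomputes both metrics for one rule from raw transactions; `rule_metrics` and the toy data are illustrative assumptions.

```r
# Recompute confidence and lift for one rule X => Y (illustrative helper).
# confidence(X => Y) = count(X and Y) / count(X)
# lift(X => Y)       = confidence(X => Y) / (count(Y) / n)
rule_metrics <- function(transactions, antecedent, consequent) {
  n <- length(transactions)
  # Number of transactions that contain every item in `items`
  count_containing <- function(items) {
    sum(vapply(transactions, function(t) all(items %in% t), logical(1)))
  }
  confidence <- count_containing(c(antecedent, consequent)) /
    count_containing(antecedent)
  lift <- confidence / (count_containing(consequent) / n)
  c(confidence = confidence, lift = lift)
}

# Hypothetical toy transactions
transactions <- list(c("a", "b", "c"), c("a", "b"), c("a", "c"), c("b", "c", "d"))
m <- rule_metrics(transactions, antecedent = "a", consequent = "b")
print(m)
```

Here `a` appears in three transactions and `a` with `b` in two, so confidence is 2/3; `b` appears in 3 of 4 transactions, so lift is (2/3) / (3/4) = 8/9. A lift below 1 means `a` makes `b` slightly less likely than its baseline rate.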

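Predict with the model (here on the same data it was trained on; any DataFrame with an `items` column works):

```r
predop <- predict(model, data)
```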

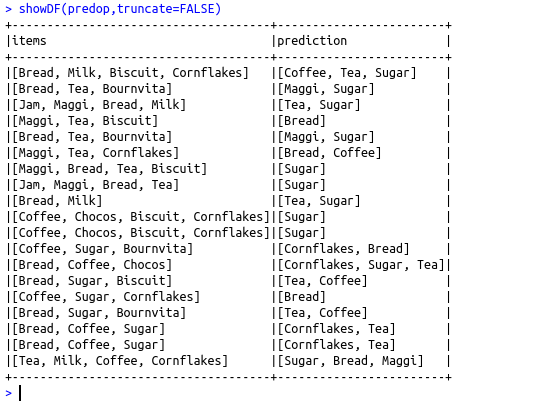

```r
showDF(predop, truncate = FALSE)
```
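`predict` adds a `prediction` column. As I understand Spark's FP-Growth model, a rule applies to a row when the row contains all of the rule's antecedent items, and the prediction is the union of the applicable rules' consequents, minus items the row already has. The base-R sketch below reproduces that logic on hand-written, hypothetical rules so the behaviour is easy to inspect; it is not Spark's code.

```r
# Sketch of FP-Growth-style prediction from association rules:
# collect consequents of every rule whose antecedent is fully contained
# in the transaction, skipping items the transaction already contains.
predict_from_rules <- function(transactions, rules) {
  lapply(transactions, function(t) {
    out <- character(0)
    for (r in rules) {
      if (all(r$antecedent %in% t)) {
        out <- c(out, setdiff(r$consequent, t))
      }
    }
    unique(out)
  })
}

# Hypothetical rules and transactions, chosen only for illustration
rules <- list(
  list(antecedent = "a", consequent = "b"),
  list(antecedent = c("b", "c"), consequent = "a"),
  list(antecedent = "d", consequent = "c")
)
new_transactions <- list(c("a"), c("b", "c"), c("a", "b"), c("e"))
pred <- predict_from_rules(new_transactions, rules)
str(pred)
```

The third transaction gets an empty prediction: the rule a => b applies, but `b` is already present, so nothing new is suggested.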