How to calculate the mean, median, and mode of sample data in Java, together with the range, variance, and standard deviation.

Range: The range of a data set is the difference between its largest and smallest values.
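As a minimal sketch of this definition, the snippet below computes the range of a small list. The class name `RangeExample`, the method name `range`, and the sample values are assumptions made for illustration; they are not part of the program further down.

```java
import java.util.Arrays;
import java.util.Collections;
import java.util.List;

// Hypothetical helper class, used only to illustrate the definition of range.
class RangeExample {

    // Range = largest value minus smallest value.
    static float range(List<Float> values) {
        if (values.isEmpty()) {
            throw new IllegalArgumentException("values must not be empty");
        }
        return Collections.max(values) - Collections.min(values);
    }

    public static void main(String[] args) {
        // Made-up sample data.
        List<Float> sample = Arrays.asList(4f, 9f, 2f, 7f);
        System.out.println("Range=" + range(sample)); // 9 - 2, prints Range=7.0
    }
}
```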

Variance: Variance measures the spread of the numbers in a data set, that is, how far each number in the set lies from the mean.
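The sketch below shows one common way to turn this definition into code: sum the squared differences from the mean and divide by n - 1 (the sample variance, which is also what the program further down does when it divides by `size-1`). The class name `VarianceExample`, the method name `sampleVariance`, and the sample values are assumptions made for illustration.

```java
// Hypothetical helper class, used only to illustrate the definition of variance.
class VarianceExample {

    // Sample variance: sum of squared deviations from the mean, divided by n - 1.
    static double sampleVariance(double[] values) {
        if (values.length < 2) {
            throw new IllegalArgumentException("need at least two values");
        }
        double sum = 0;
        for (double v : values) {
            sum += v;
        }
        double mean = sum / values.length;

        double squaredDeviations = 0;
        for (double v : values) {
            squaredDeviations += (v - mean) * (v - mean);
        }
        return squaredDeviations / (values.length - 1);
    }

    public static void main(String[] args) {
        // Made-up sample data.
        double[] sample = {1, 2, 3, 4, 5};
        // mean = 3, squared deviations = 4 + 1 + 0 + 1 + 4 = 10, variance = 10 / 4
        System.out.println("Variance=" + sampleVariance(sample)); // prints Variance=2.5
    }
}
```

Dividing by n instead of n - 1 would give the population variance; which one is appropriate depends on whether the data is a sample or the whole population.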

Standard deviation: The standard deviation quantifies the amount of variation or dispersion in a set of data values. It is the square root of the variance.
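Because the standard deviation is the square root of the variance, it is expressed in the same units as the data. A minimal sketch, assuming the sample (n - 1) form of the variance and using the hypothetical names `StdDevExample` and `sampleStdDev`:

```java
// Hypothetical helper class, used only to illustrate the standard deviation.
class StdDevExample {

    // Sample standard deviation: square root of the sample variance.
    static double sampleStdDev(double[] values) {
        if (values.length < 2) {
            throw new IllegalArgumentException("need at least two values");
        }
        double sum = 0;
        for (double v : values) {
            sum += v;
        }
        double mean = sum / values.length;

        double squaredDeviations = 0;
        for (double v : values) {
            squaredDeviations += (v - mean) * (v - mean);
        }
        double variance = squaredDeviations / (values.length - 1);
        return Math.sqrt(variance);
    }

    public static void main(String[] args) {
        // Made-up sample data: mean = 4, variance = (4 + 0 + 4) / 2 = 4.
        double[] sample = {2, 4, 6};
        System.out.println("Standard deviation=" + sampleStdDev(sample)); // prints Standard deviation=2.0
    }
}
```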

package Stat;

import java.io.BufferedReader;

import java.io.File;

import java.io.FileNotFoundException;

import java.io.FileReader;

import java.io.IOException;

import java.io.PrintWriter;

import java.util.ArrayList;

import java.util.Arrays;

import java.util.Collections;

import java.util.Scanner;

public class mean {

public static void main(String[] args) throws FileNotFoundException, IOException {

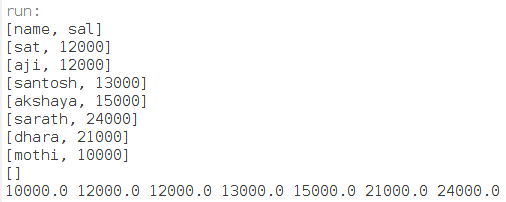

BufferedReader in = new BufferedReader(new FileReader(new File("file.csv")));

PrintWriter out = new PrintWriter(new File("file2.csv"));

File file = new File("file2.csv");

int skipIndex = 1;

String line1;

while ((line1 = in.readLine()) != null) {

String[] parts = line1.split(" ");

System.out.println(Arrays.toString(parts));

StringBuilder outLine = new StringBuilder();

for (int i = 0; i < parts.length; i++) {

if (i + 1 != skipIndex) {

outLine.append(" " + parts[i]);

}

}

out.println(outLine.toString().trim());

}

in.close();

out.close();

String line;

Scanner sc = null;

try {

sc = new Scanner(file);

sc.next();

} catch (FileNotFoundException e) {

System.out.println("File not found");

e.printStackTrace();

return;

}

// Typed list so the elements can be read back as Float values below

ArrayList<Float> list = new ArrayList<>();

while (sc.hasNextFloat())

list.add(sc.nextFloat());

int size = list.size();

if (size == 0) {

System.out.println("Empty list");

return;

}

Collections.sort(list);

for (int i = 0; i < size; i++) {

System.out.print(list.get(i) + " ");

}

System.out.println();

// mean value

float sum = 0;

for (float x : list) {

sum += x;

}

float mean = sum / size;

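// Range: find the largest and smallest values

float max = Float.NEGATIVE_INFINITY;

float min = Float.POSITIVE_INFINITY;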

for(int i = 0; i < list.size(); i++){

Float elementValue = list.get(i);

if(max < elementValue){

max = elementValue;

}

if(elementValue < min){

min = elementValue;

}

}

// Variance (sample variance: divides by size - 1)

double variance;

double m = mean;

double temp = 0;

for(double a :list)

temp += (a-m)*(a-m);

variance= temp/(size-1);

// Standard deviation: square root of the variance

double sd=Math.sqrt(variance);

System.out.println("Mean=" + mean);

System.out.println("Range=" + (max - min));

System.out.println("Variance=" + variance);

System.out.println("Standard deviation=" + sd);

}

}
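The program above computes the mean, range, variance, and standard deviation, but not the median or mode mentioned at the start. One way they might be computed is sketched below. The class name `MedianModeExample`, the method names `median` and `mode`, and the sample values are assumptions for illustration, and breaking ties in the mode toward the smallest value is a choice made here, not something the original program specifies.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.Collections;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Hypothetical helper class; not part of the program above.
class MedianModeExample {

    // Median: middle value of the sorted data, or the average of the two middle values.
    static float median(List<Float> values) {
        if (values.isEmpty()) {
            throw new IllegalArgumentException("values must not be empty");
        }
        List<Float> sorted = new ArrayList<>(values); // copy so the input is not modified
        Collections.sort(sorted);
        int n = sorted.size();
        if (n % 2 == 1) {
            return sorted.get(n / 2);
        }
        return (sorted.get(n / 2 - 1) + sorted.get(n / 2)) / 2;
    }

    // Mode: most frequent value. Assumption: ties go to the smallest value.
    static float mode(List<Float> values) {
        if (values.isEmpty()) {
            throw new IllegalArgumentException("values must not be empty");
        }
        Map<Float, Integer> counts = new HashMap<>();
        for (Float v : values) {
            counts.merge(v, 1, Integer::sum); // increment the count for this value
        }
        float best = 0;
        int bestCount = 0;
        for (Map.Entry<Float, Integer> e : counts.entrySet()) {
            float value = e.getKey();
            int count = e.getValue();
            if (count > bestCount || (count == bestCount && value < best)) {
                best = value;
                bestCount = count;
            }
        }
        return best;
    }

    public static void main(String[] args) {
        // Made-up sample data; sorted it is 1, 1, 3, 4, 5, 9.
        List<Float> sample = Arrays.asList(3f, 1f, 4f, 1f, 5f, 9f);
        System.out.println("Median=" + median(sample)); // (3 + 4) / 2, prints Median=3.5
        System.out.println("Mode=" + mode(sample));     // 1 appears twice, prints Mode=1.0
    }
}
```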